Improving Model Performance

Try Multiple Models

On Datature, it is easy to try out different models. The models we offer vary in several ways, including the complexity of their network architecture, and are suited to different tasks. A large model with a complex architecture might work well on a large dataset but perform poorly on a smaller one due to overfitting.

The easiest way to find a suitable model for your dataset is to create several workflows using different models and compare their evaluation metrics to see which model works best for your dataset.

If inference speed is a concern, you might want to focus on models with lower complexity, which tend to run faster.
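Once several workflows have finished training, choosing between them comes down to comparing their evaluation metrics, possibly under a speed budget. The sketch below assumes you have copied each workflow's evaluation metric (for example mAP, higher is better) and a measured per-image latency into a hypothetical `WorkflowResult` record; neither the record nor `pick_model` is part of any Datature API, and the model names and numbers are placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WorkflowResult:
    """Summary of one trained workflow (hypothetical structure, not a Datature API)."""
    model_name: str
    eval_map: float    # evaluation metric where higher is better, e.g. mAP
    latency_ms: float  # measured inference time per image, in milliseconds


def pick_model(results: List[WorkflowResult],
               max_latency_ms: Optional[float] = None) -> Optional[WorkflowResult]:
    """Return the result with the best eval metric, optionally within a latency budget."""
    candidates = [
        r for r in results
        if max_latency_ms is None or r.latency_ms <= max_latency_ms
    ]
    if not candidates:
        return None  # no model is fast enough for the given budget
    return max(candidates, key=lambda r: r.eval_map)


# Placeholder numbers for illustration only.
results = [
    WorkflowResult("model_a", eval_map=0.62, latency_ms=45.0),
    WorkflowResult("model_b", eval_map=0.58, latency_ms=12.0),
    WorkflowResult("model_c", eval_map=0.55, latency_ms=8.0),
]
print(pick_model(results).model_name)                     # model_a
print(pick_model(results, max_latency_ms=20).model_name)  # model_b
```

Filtering by latency first and then maximizing the metric keeps the trade-off explicit: without a budget the most accurate model wins, and with one the best model that is fast enough wins.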

Reduce Overfitting

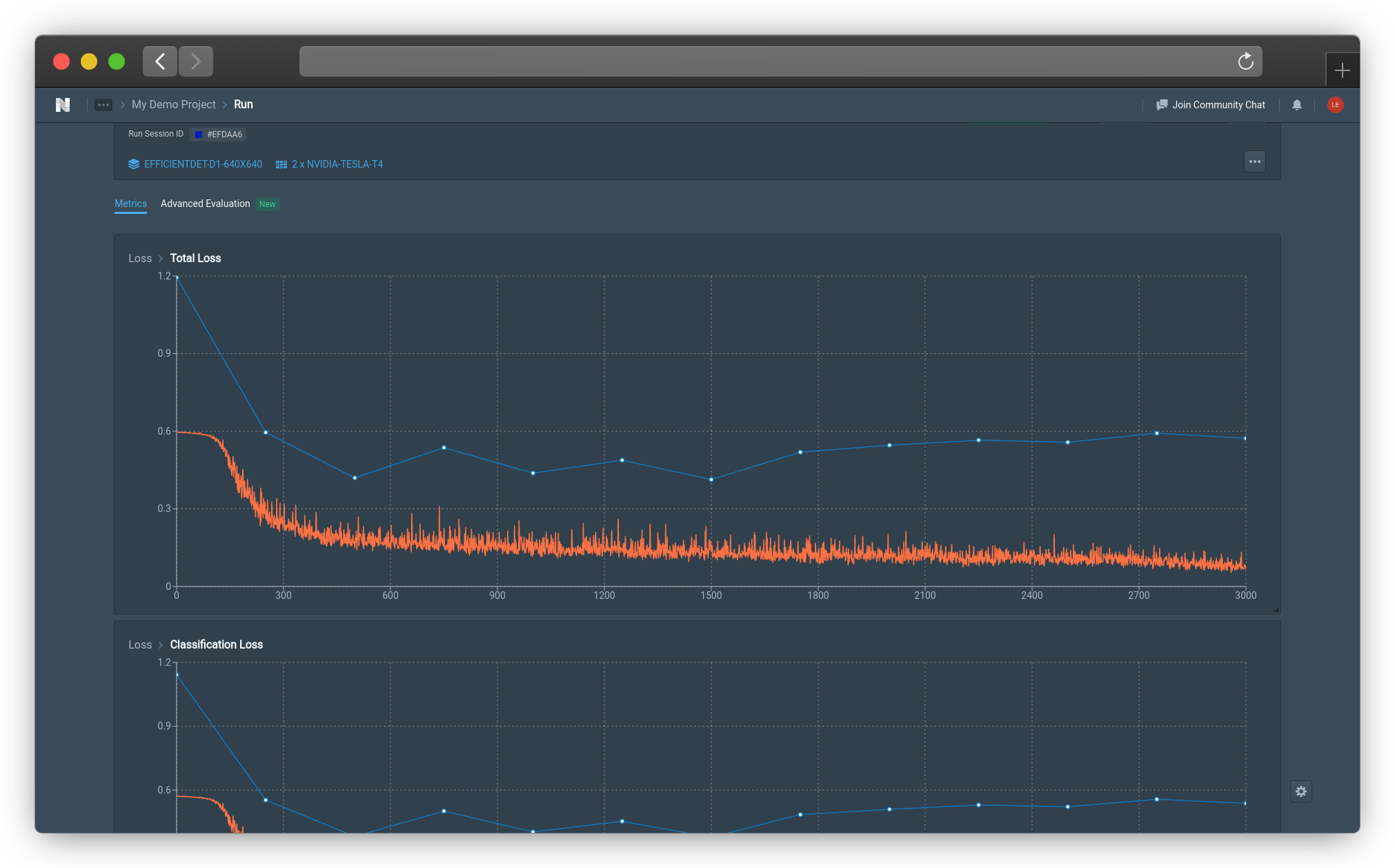

Overfitting occurs when a model learns weights that are too specific to the training data, in essence 'memorizing' the quirks of the training data. An overfit model performs very well on the training data, but performs poorly on unseen data. In the example below, the model has a low Total Loss on the training data (orange line), and a much higher Total Loss on the evaluation data (blue line).

Model Performance Worsening With More Training (Click image to enlarge)
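The pattern in the figure (training loss keeps falling while evaluation loss stops improving and starts to rise) can also be detected programmatically. The sketch below assumes you have the training and evaluation loss recorded at each evaluation step as plain lists; the function name `overfitting_onset` and its `patience` parameter are hypothetical, and the loss values are made up rather than taken from the figure.

```python
from typing import List, Optional


def overfitting_onset(steps: List[int], train_loss: List[float],
                      eval_loss: List[float], patience: int = 2) -> Optional[int]:
    """Return the step with the lowest eval loss once eval loss has failed to improve
    for `patience` consecutive evaluations while train loss kept falling; else None."""
    if not (len(steps) == len(train_loss) == len(eval_loss)):
        raise ValueError("steps and loss lists must have the same length")
    best_idx, streak = 0, 0
    for i in range(1, len(steps)):
        if eval_loss[i] < eval_loss[best_idx]:
            best_idx, streak = i, 0      # eval loss improved: no sign of overfitting yet
        elif train_loss[i] < train_loss[i - 1]:
            streak += 1                  # train improves but eval does not: overfitting signal
            if streak >= patience:
                return steps[best_idx]
        else:
            streak = 0                   # both stalled: not the overfitting pattern
    return None


# Made-up loss values for illustration only.
steps = [500, 1000, 1500, 2000, 2500]
train = [0.90, 0.60, 0.40, 0.30, 0.20]
evals = [1.00, 0.80, 0.85, 0.95, 1.10]
print(overfitting_onset(steps, train, evals))  # 1000
```

Requiring several consecutive bad evaluations (`patience`) avoids reacting to a single noisy evaluation.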

Besides trying multiple models, you can reduce overfitting by:

- Augmenting images

- Selecting intermediate checkpoints

- Increasing your batch size

Augmenting Images

Without image augmentation, the model trains on the same data every training epoch, which increases the chance that the model will learn weights specific to this training data. With image augmentation, the model will see slight variations of the training data on every epoch, reducing overfitting.
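To illustrate what "slight variations of the training data" means, here is a minimal sketch of two common augmentations: a random horizontal flip and a random brightness shift. It is not Datature's augmentation module; it assumes a grayscale image stored as a list of pixel rows and bounding boxes as `(x_min, y_min, x_max, y_max)` pixel tuples, and the function name `augment` and the ±30 brightness range are hypothetical choices.

```python
import random
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels, rows of 0-255 values
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def augment(image: Image, boxes: List[Box], rng: random.Random) -> Tuple[Image, List[Box]]:
    """Return a randomly varied copy of an image and its bounding boxes."""
    width = len(image[0])
    out = [row[:] for row in image]  # copy so the original image is untouched
    out_boxes = list(boxes)
    # Random horizontal flip (50% chance); boxes must be mirrored along with the pixels.
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]
        out_boxes = [(width - x_max, y_min, width - x_min, y_max)
                     for (x_min, y_min, x_max, y_max) in out_boxes]
    # Random brightness shift, clamped to the valid 0-255 pixel range.
    delta = rng.randint(-30, 30)
    out = [[min(255, max(0, p + delta)) for p in row] for row in out]
    return out, out_boxes


rng = random.Random(42)
image = [[10, 20, 30, 40], [50, 60, 70, 80]]
boxes = [(0, 0, 2, 1)]
for epoch in range(3):
    # Each epoch sees a different variation of the same labelled image.
    aug_image, aug_boxes = augment(image, boxes, rng)
    print(epoch, aug_boxes, aug_image[0])
```

For object detection, geometric augmentations such as flips must transform the annotations too; otherwise the boxes no longer match the objects.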

Image augmentation is a module that can be selected when creating a workflow; read more about it here.

A training step is counted each time the model weights are updated, which happens after every batch of training data is run through the model. Thus, for those more familiar with epochs, the number of training steps can be computed as:

Number of Epochs * (Dataset Size/Batch Size) = Number of Steps
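The conversion above can be sketched as a small helper. One detail the formula leaves open is what happens when the dataset size is not a multiple of the batch size; the sketch assumes the last partial batch still counts as a step unless the hypothetical `drop_last` flag is set. The function names are illustrative only.

```python
def steps_for_epochs(num_epochs: int, dataset_size: int, batch_size: int,
                     drop_last: bool = False) -> int:
    """Number of Epochs * (Dataset Size / Batch Size) = Number of Steps."""
    if batch_size <= 0 or dataset_size <= 0:
        raise ValueError("dataset_size and batch_size must be positive")
    if drop_last:
        steps_per_epoch = dataset_size // batch_size      # incomplete last batch is skipped
    else:
        steps_per_epoch = -(-dataset_size // batch_size)  # ceiling: last partial batch is a step
    return num_epochs * steps_per_epoch


def epochs_for_steps(num_steps: int, dataset_size: int, batch_size: int) -> float:
    """Approximate number of passes over the dataset for a given step count."""
    return num_steps * batch_size / dataset_size


print(steps_for_epochs(50, 1000, 8))    # 6250
print(steps_for_epochs(50, 1003, 8))    # 6300
print(epochs_for_steps(6250, 1000, 8))  # 50.0
```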

Selecting Intermediate Checkpoints

Model performance on the training set should either improve or stay the same over training epochs, but performance on the evaluation set might worsen over training epochs. This can happen if the model starts to overfit after a number of training epochs.

In such a case, if no other model performs better, you can use the weights from an intermediate checkpoint instead. In the example above, the weights at step 1,000 give the best performance on the evaluation set. We save the model weights at various checkpoints, which you can find in the artifacts section. You can control how model artifacts are saved under Training Option: Checkpoint Strategy.
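Choosing a checkpoint amounts to finding the lowest evaluation loss among the steps whose weights were actually saved, since the checkpoint strategy may not keep weights for every evaluation. The sketch below assumes you have noted the evaluation loss per step and the list of saved checkpoint steps; `best_checkpoint` is a hypothetical helper, not a Datature API, and the loss values are made up.

```python
from typing import Dict, Iterable, Optional


def best_checkpoint(eval_loss_by_step: Dict[int, float],
                    saved_steps: Iterable[int]) -> Optional[int]:
    """Return the saved checkpoint step with the lowest evaluation loss, or None."""
    # Only steps that were both evaluated and saved as checkpoints can be restored.
    available = [s for s in saved_steps if s in eval_loss_by_step]
    if not available:
        return None
    return min(available, key=lambda s: eval_loss_by_step[s])


# Made-up evaluation losses for illustration only.
eval_loss = {500: 1.00, 1000: 0.80, 1500: 0.85, 2000: 0.95, 2500: 1.10}
print(best_checkpoint(eval_loss, saved_steps=[1000, 2000, 2500]))  # 1000
print(best_checkpoint(eval_loss, saved_steps=[2000, 2500]))        # 2000
```

The second call shows why the checkpoint strategy matters: if the best step was never saved, the best you can recover is the nearest saved checkpoint.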

Overfitting can have many causes. A few common ones are selecting an overly complex model, overtraining your model, and a lack of variability in the training dataset. To find an appropriate model, try multiple models. To catch overtraining, you can decrease the evaluation interval to get more frequent updates on your model's performance. For a lack of training dataset variability, you can add more images to your training dataset or use more extensive augmentation.

Underfitting

Underfitting is another common problem in machine learning. It is the opposite of overfitting: the model does not fit the training data well, so it performs poorly even on the data it was trained on. The simplest remedies are to train the model for longer or to lower the batch size. Provided an appropriate model is selected, users are unlikely to encounter this problem unless they are working on very complex cases, such as object detection with a very large number of classes. Keep in mind that machine learning models generally perform better with more training examples and fewer classes to distinguish, so where possible, narrow the scope of the task as much as you can to reach the desired accuracy.
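One rough way to judge whether "train for longer" is likely to help is to check whether the training loss is still dropping noticeably at the end of the run. The heuristic below is a sketch under assumptions: the function name `still_improving`, the window of 3 evaluations, and the 5% threshold are all hypothetical and should be tuned to your own loss curves.

```python
from typing import List


def still_improving(train_loss: List[float], window: int = 3,
                    min_rel_drop: float = 0.05) -> bool:
    """Heuristic: True if training loss fell by more than `min_rel_drop` (as a fraction)
    over the last `window` evaluations, hinting that longer training may help."""
    if len(train_loss) <= window:
        return True  # too few evaluations to judge; assume training is not finished
    start, end = train_loss[-window - 1], train_loss[-1]
    if start <= 0:
        return False  # loss already at (or below) zero; nothing left to gain
    return (start - end) / start > min_rel_drop


print(still_improving([2.0, 1.6, 1.3, 1.1, 0.95]))            # True
print(still_improving([2.0, 1.2, 0.90, 0.89, 0.885, 0.884]))  # False
```

If the training loss has instead plateaued at a high value, more steps are unlikely to help on their own, and the other remedies above are worth trying.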

Datature will be adding more customizable features to allow users to better align models and model training with their use cases.


Updated 6 months ago