Annotation Consensus

What is Annotation Consensus?

Annotation consensus is a method of identifying and reconciling conflicting labels in a dataset. It resolves these conflicts by determining the single label that best represents the true label for a given data point. This can be done by assigning a score to each candidate label and then selecting the label with the highest score. The scores can be determined by factors such as the level of agreement between multiple annotators labelling the same data point. To learn more about how consensus is calculated, read our blog post on annotation consensus calculation.
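As a minimal illustration of the score-then-select idea, the sketch below assumes that each annotator assigns a single class label to a data point and that a label's score is simply the fraction of annotators who chose it. This scoring rule is an assumption for illustration only; the calculation Nexus actually uses is described in the blog post, not here. `consensus_label` is a hypothetical helper.

```python
from collections import Counter


def consensus_label(labels: list[str]) -> tuple[str, float]:
    """Return the label with the highest agreement score, plus that score.

    Hypothetical scoring: a label's score is the fraction of annotators
    who assigned it. Ties go to the label encountered first.
    """
    if not labels:
        raise ValueError("need at least one label")
    counts = Counter(labels)
    # most_common orders equal counts by first occurrence (Python 3.7+).
    best, votes = counts.most_common(1)[0]
    return best, votes / len(labels)


if __name__ == "__main__":
    # Three annotators labelled the same data point.
    print(consensus_label(["cat", "cat", "dog"]))
```

Here two of three annotators agree on "cat", so it is selected with an agreement score of two thirds.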

How does Consensus Work on Nexus?

We group annotations from multiple labellers based on their similarity and then select the most representative annotation for each group through a majority vote. Similarity between annotations is measured using Intersection over Union (IoU): the area where two annotations overlap divided by the total area they cover together. The higher the IoU score, the greater the overlap between the two annotations, which suggests that both annotators agree on the label assigned to a particular object.
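The grouping-and-voting process can be sketched for bounding boxes as follows. This is a simplified illustration, not the Nexus implementation: it assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`, a greedy grouping strategy, and a hypothetical IoU threshold of 0.5. Within each group, a majority vote picks the label, and among the annotations carrying that label, the one that overlaps the rest of the group most on average is kept. The names `Annotation`, `group_annotations`, and `representative` are illustrative.

```python
from collections import Counter
from dataclasses import dataclass

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Annotation:
    labeller: str
    label: str
    box: Box


def group_annotations(anns: list[Annotation], threshold: float = 0.5) -> list[list[Annotation]]:
    """Greedily add each annotation to the first group whose seed box it
    overlaps with IoU >= threshold (hypothetical cut-off); else start a group."""
    groups: list[list[Annotation]] = []
    for ann in anns:
        for group in groups:
            if iou(ann.box, group[0].box) >= threshold:
                group.append(ann)
                break
        else:
            groups.append([ann])
    return groups


def representative(group: list[Annotation]) -> Annotation:
    """Majority vote on the label, then keep the member with that label that
    overlaps the rest of the group most on average."""
    top_label = Counter(a.label for a in group).most_common(1)[0][0]

    def mean_iou(a: Annotation) -> float:
        others = [b for b in group if b is not a]
        return sum(iou(a.box, b.box) for b in others) / len(others) if others else 1.0

    return max((a for a in group if a.label == top_label), key=mean_iou)


anns = [
    Annotation("alice", "car", (0, 0, 10, 10)),
    Annotation("bob", "car", (1, 1, 11, 11)),
    Annotation("carol", "truck", (0, 0, 10, 9)),
    Annotation("alice", "person", (50, 50, 60, 70)),
]
for g in group_annotations(anns):
    print(representative(g))
```

With this sample data, the first three boxes fall into one group because their pairwise IoU is well above 0.5. "car" wins the vote, and alice's box is kept because it overlaps the other two more than bob's does. The isolated "person" box forms its own group.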

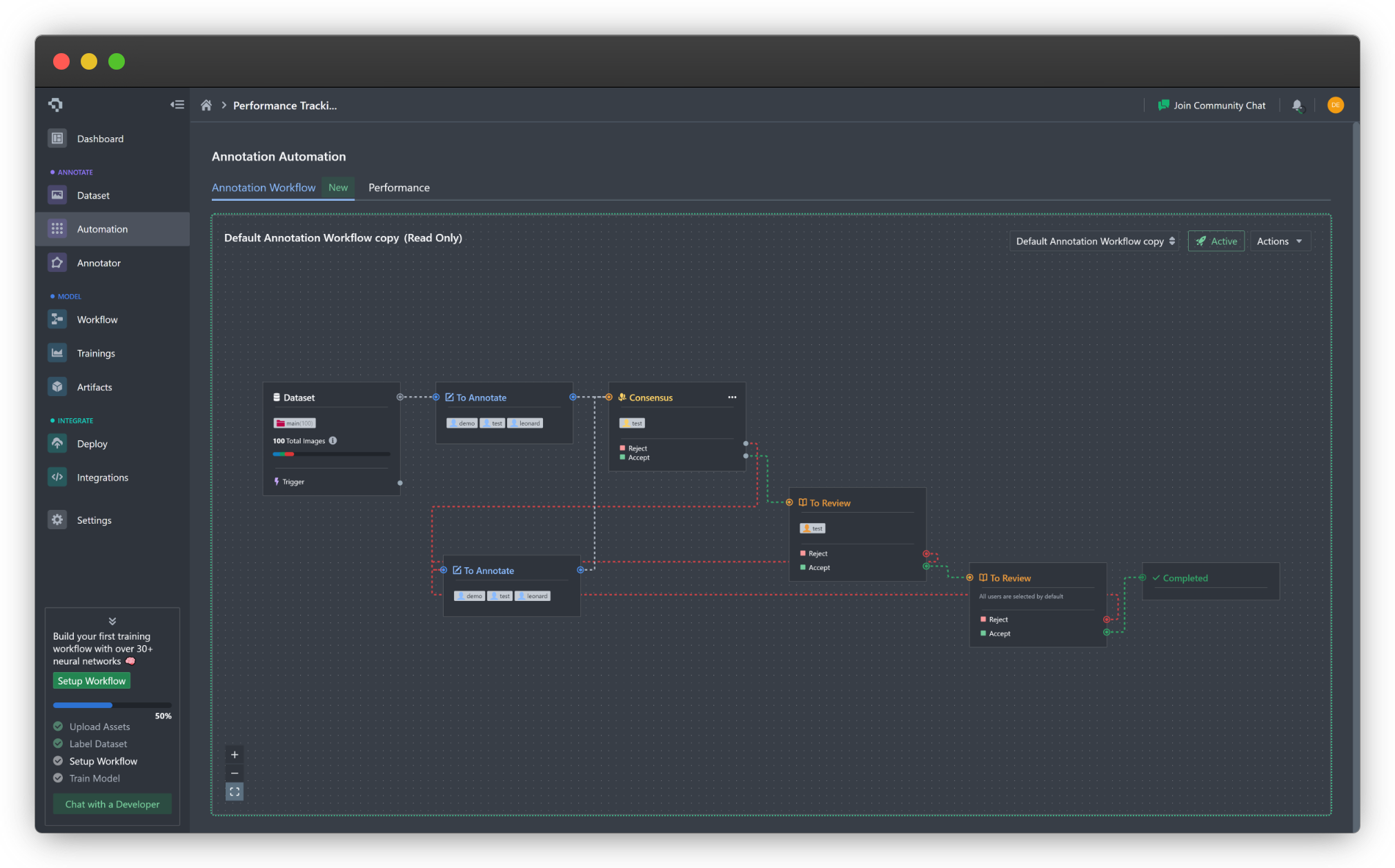

Consensus adds an extra layer of quality assessment to Annotation Automation. It is implemented as a majority voting tool that can be seamlessly incorporated into your annotation workflow right from the start without the need for a trained model. To include consensus in your annotation workflow, simply add a Consensus block after a To Annotate block in your workflow. The Consensus block acts as a preliminary review stage for reconciling multiple sets of annotations, so it must come before any To Review blocks.
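The placement rule can be expressed as a small check. This sketch is hypothetical: it models a workflow as an ordered list of block names, which ignores the Accept and Reject routes a real Nexus workflow has, and `consensus_placement_errors` is an illustrative helper, not part of any Nexus API.

```python
def consensus_placement_errors(blocks: list[str]) -> list[str]:
    """List problems with where Consensus sits in a linear workflow.

    Hypothetical model: `blocks` holds block names in execution order.
    """
    errors: list[str] = []
    if "Consensus" not in blocks:
        return errors
    i = blocks.index("Consensus")
    # Consensus reconciles sets produced by an earlier To Annotate block.
    if "To Annotate" not in blocks[:i]:
        errors.append("Consensus must come after a To Annotate block")
    # Consensus is a preliminary review, so no To Review block may precede it.
    if "To Review" in blocks[:i]:
        errors.append("Consensus must come before any To Review block")
    return errors


if __name__ == "__main__":
    print(consensus_placement_errors(["To Annotate", "Consensus", "To Review"]))
    print(consensus_placement_errors(["To Annotate", "To Review", "Consensus"]))
```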

Example of an annotation workflow incorporating consensus.

An asset will only be sent for consensus review if there are at least two labellers who have annotated the same asset. If there is only one set of annotations, the asset will bypass the Consensus block and move on to the subsequent stage in your workflow.
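The bypass rule amounts to a simple routing decision. The sketch below assumes an asset's annotations are held in a dict keyed by labeller name, and it counts every labeller present as having annotated the asset. The stage names it returns are placeholders, not Nexus identifiers.

```python
def route_asset(annotation_sets: dict[str, list]) -> str:
    """Return the next stage for an asset (placeholder stage names).

    Assumption: each key is a distinct labeller who annotated the asset.
    """
    if len(annotation_sets) >= 2:
        return "consensus_review"  # two or more sets to reconcile
    return "next_stage"  # a single set bypasses the Consensus block


if __name__ == "__main__":
    print(route_asset({"alice": ["box-1"], "bob": ["box-2"]}))
    print(route_asset({"alice": ["box-1"]}))
```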

You can specify which users act as reviewers in the Consensus block. These users are responsible for reviewing duplicate annotations from multiple labellers and choosing which annotations to accept or reject. By default, all users are selected.

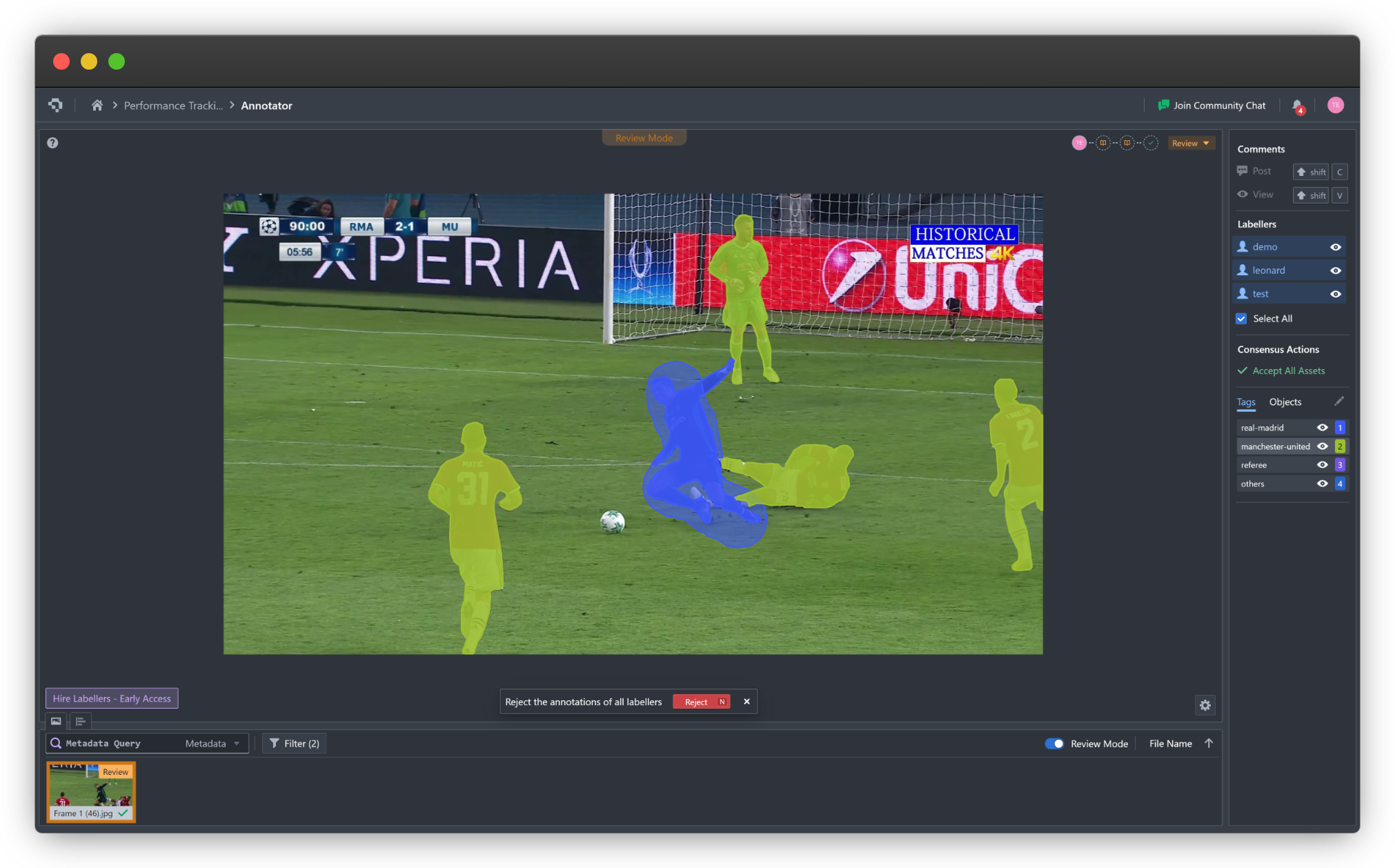

If you are tasked with managing Consensus block reviews, activate Review Mode on the Annotator page. The right sidebar lists all labellers who have annotated the selected asset, together with a few actions. By default, all labellers’ annotations are visible and overlaid on the asset. When all labellers are selected, the default action shown below the image is to reject all annotations.

Consensus interface when Review Mode is activated. By default, all sets of annotations are visible.

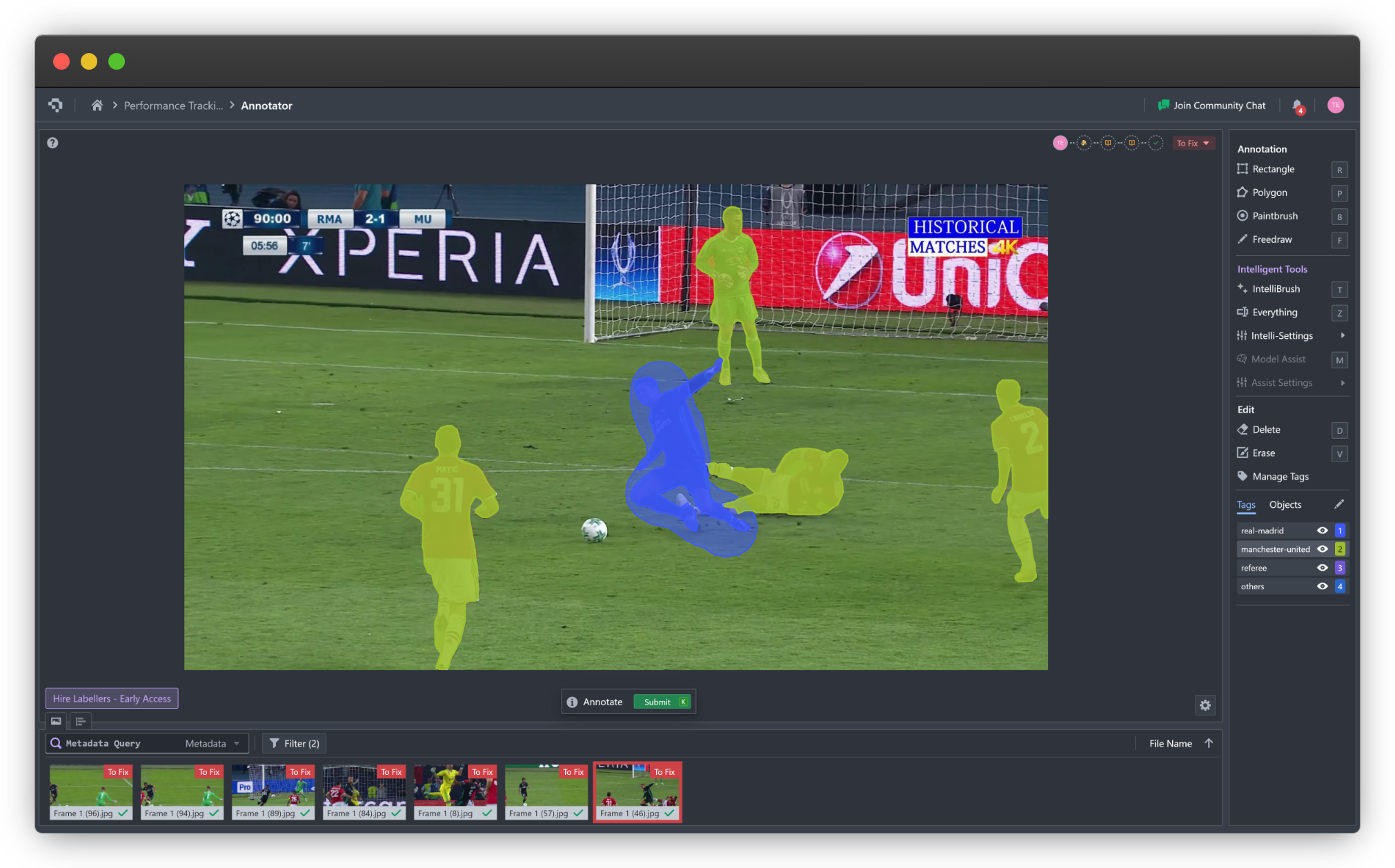

If all sets of annotations for an asset are rejected, and the Reject route of the Consensus block leads to a stage that assigns another labeller to re-annotate the asset, that labeller will be able to view all past annotations from every labeller who annotated the asset. The new labeller can choose to edit the old annotations or to delete them and create new ones.

Annotator interface when all sets of annotations of an asset are rejected and sent for re-annotation.

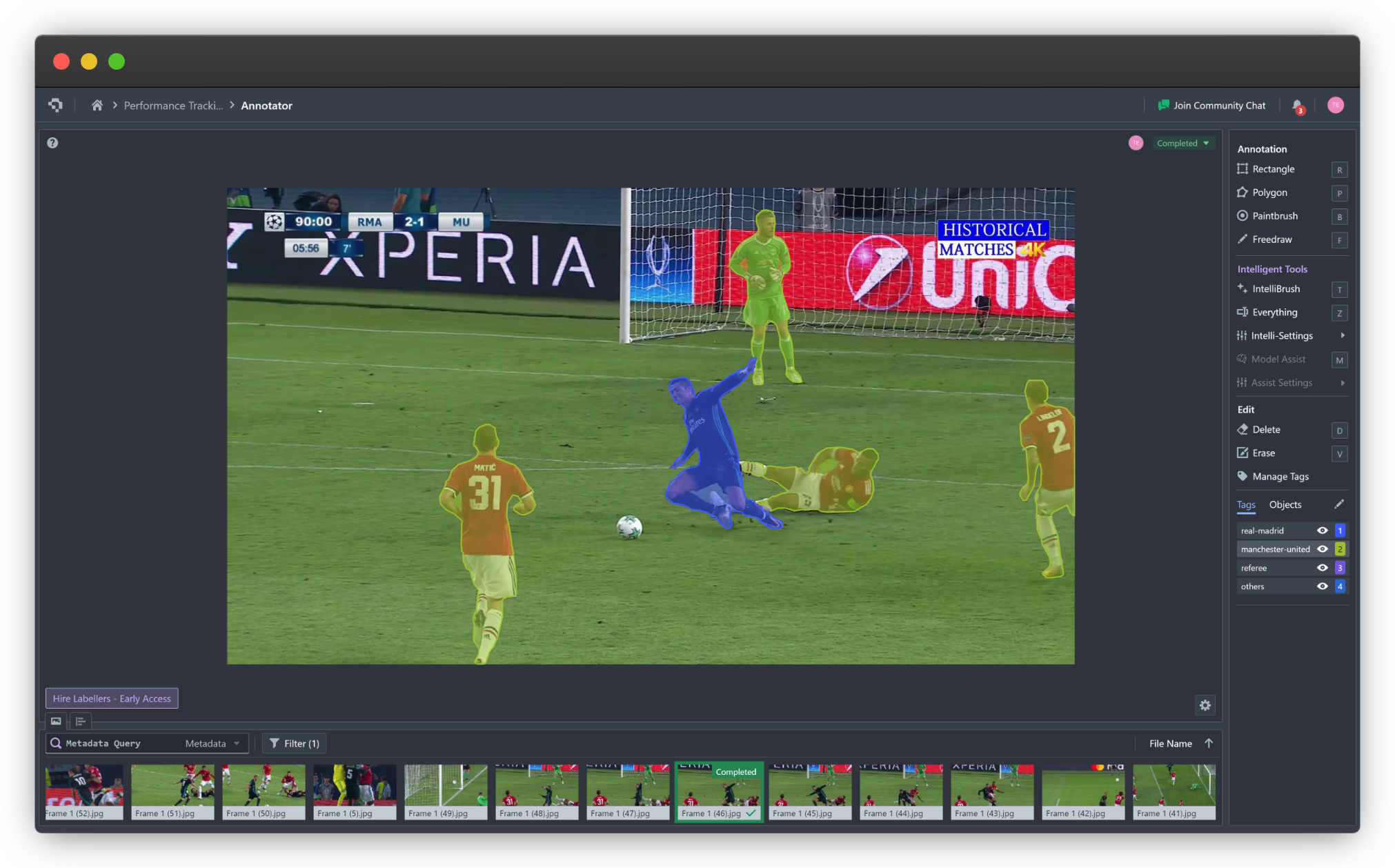

To view a particular labeller’s annotations in Review Mode, the reviewer can simply click on that labeller’s name. From there, they can choose to accept that labeller’s annotations.

Consensus interface with a particular labeller’s annotations selected.

Only one set of annotations for each asset can be accepted to advance the asset to the next stage of the annotation workflow via the Accept route of the Consensus block. The remaining sets of annotations are irreversibly discarded when this happens, so make sure you are accepting a satisfactory set of annotations before committing the review.

Only one set of annotations can be accepted before moving on to the next stage in the annotation workflow.

The Accept All Assets button automatically keeps the set of annotations with an average consensus score of at least 80%. If multiple sets meet this threshold, the set with the highest average consensus score is accepted. If none of the sets meet it, all of them are rejected.

Common Questions

Why do two highly similar annotations have low consensus scores?

This could suggest that a third annotator either annotated incorrectly or missed an annotation. The consensus algorithm penalises the scores of incorrect, missing, or redundant annotations, which indirectly affects the scores of other annotations. A low score in this situation therefore serves as a warning that a discrepancy or anomaly may exist.
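To see how a third annotator can drag down the scores of two annotators who agree, consider a hypothetical scoring rule (not the Nexus algorithm): each box is scored by its best IoU against every other labeller's set, averaged over those labellers, with a labeller who has no overlapping box contributing 0. Boxes are assumed to be `(x_min, y_min, x_max, y_max)`, and `annotation_scores` is an illustrative helper.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def annotation_scores(sets: dict[str, list[Box]]) -> dict[str, list[float]]:
    """Hypothetical score per box: best IoU against each other labeller's
    set, averaged over those labellers (an empty set contributes 0)."""
    if len(sets) < 2:
        raise ValueError("consensus needs at least two labellers")
    scores: dict[str, list[float]] = {}
    for name, boxes in sets.items():
        others = [other for other_name, other in sets.items() if other_name != name]
        scores[name] = [
            sum(max((iou(box, ob) for ob in other), default=0.0) for other in others)
            / len(others)
            for box in boxes
        ]
    return scores


if __name__ == "__main__":
    agree = {"A": [(0, 0, 10, 10)], "B": [(0, 0, 10, 10)]}
    print(annotation_scores(agree))               # A and B match perfectly
    print(annotation_scores({**agree, "C": []}))  # C missed the object
```

Under this rule, A and B each score 1.0 on their own. Once C, who drew nothing, is included, their identical boxes drop to 0.5 each, which is the kind of warning signal described above.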

What happens if all annotations have high consensus scores?

The reviewer should approve only one set of annotations to pass through to the next stage. This ensures that the model does not receive duplicate annotations during training. Rejected annotations do not affect the aggregated consensus scores of each labeller in any way.

What happens if all annotations have low consensus scores?

The reviewer can reject the annotations, in which case the asset will be sent back for re-annotation, depending on how the Annotation Automation workflow is set up. The asset will only move on to the next stage of the workflow once one set of annotations has been approved.

